Large Language Model Security Professional (LLMSP) Certification | Industry-Standard LLM Security Exam

Large Language Model Security Professional (LLMSP) Certification

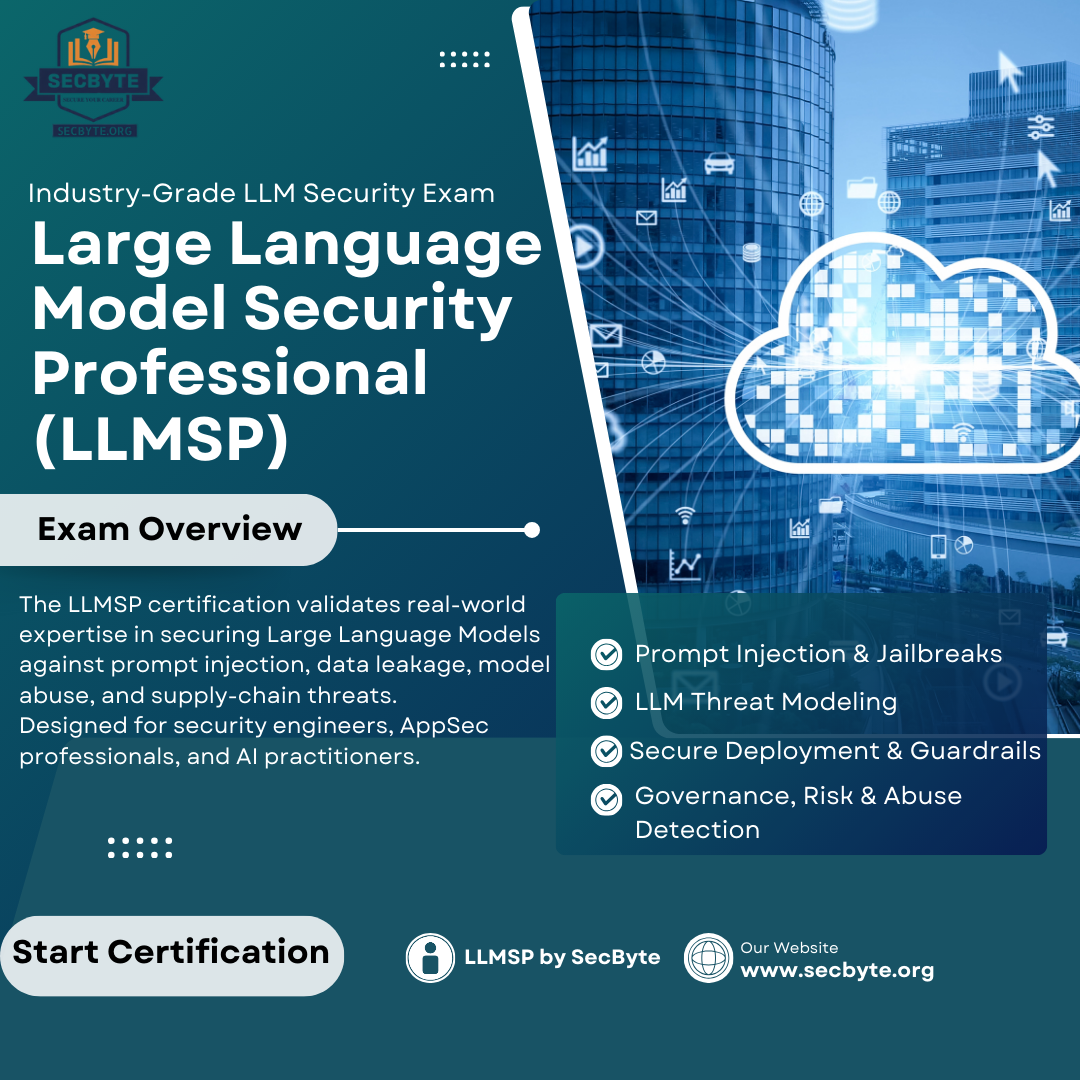

The Large Language Model Security Professional (LLMSP) certification is an industry-grade, vendor-neutral professional exam designed to validate real-world expertise in securing Large Language Models (LLMs) across modern enterprise environments. As organizations increasingly deploy LLMs into production systems, ranging from customer support bots to autonomous decision-making pipelines, managing the security risks of these systems has become mission-critical.

Traditional application security certifications no longer fully address the unique threat landscape introduced by LLMs. The LLMSP certification fills this gap by focusing exclusively on LLM-specific attack vectors, defensive controls, governance challenges, and operational security risks.

Why LLMSP Exists

Large Language Models introduce new classes of vulnerabilities that do not exist in traditional software systems. These include prompt injection attacks, training data leakage, indirect prompt manipulation, model abuse, output poisoning, and AI-driven social engineering at scale. Many organizations deploy LLMs without fully understanding these risks, often relying on surface-level safeguards that fail under real-world adversarial conditions.

LLMSP was created to establish a clear professional benchmark for individuals responsible for building, deploying, securing, auditing, or governing LLM-powered systems.

This certification is not theoretical. It is scenario-driven, adversary-aware, and grounded in real enterprise security practices.

Exam Overview

- Exam Duration: 6 Hours
- Total Questions: 600
- Question Format: Multiple-Choice Questions (MCQs)
- Style: 100% Scenario-Based
- Delivery Mode: Individual, proctored (recommended)
- Certification Type: Vendor-Neutral
- Difficulty Level: Professional to Advanced

Each question places the candidate in a realistic security scenario, requiring analysis, decision-making, and prioritization rather than memorization. There are no trivia questions, definitions, or purely academic prompts.

Who Should Take the LLMSP Certification

The LLMSP certification is designed for professionals who already understand security fundamentals and want to specialize in AI and LLM security.

Ideal candidates include:

- Application Security Engineers
- Security Architects
- AI / ML Engineers working with LLMs
- DevSecOps Engineers
- Cloud Security Engineers
- Red Team and Blue Team professionals
- GRC professionals responsible for AI governance
- Security consultants advising on AI adoption

This exam is not beginner-level. Candidates are expected to have prior exposure to security concepts, threat modeling, and modern software architectures.

What Makes LLMSP Industry-Grade

1. Scenario-Based Evaluation

Every question is rooted in a realistic deployment, attack, or incident scenario. Candidates must choose the most effective, most appropriate, or highest-impact response.

2. Focus on LLM-Specific Threats

Unlike general security exams, LLMSP covers threats that are unique to language models, including prompt chaining attacks, context manipulation, and output trust failures.

3. Defensive and Offensive Balance

Candidates are evaluated on both how attacks work and how to design resilient defenses, ensuring a complete understanding of the threat lifecycle.

4. Vendor-Neutral by Design

LLMSP avoids dependency on any specific AI provider, cloud platform, or proprietary framework. Concepts are applicable across environments.

5. Enterprise-Ready Mindset

Questions emphasize risk reduction, blast-radius containment, governance, monitoring, and incident response, not just technical exploits.

📚 LLMSP Exam Modules (30 Modules)

- Foundations of Large Language Models
- LLM Architectures and Deployment Models
- Threat Modeling for LLM Systems
- Prompt Injection Fundamentals
- Advanced Prompt Injection Techniques
- Indirect Prompt Injection Attacks
- Prompt Chaining and Context Abuse
- Jailbreaking and Safety Bypass Methods
- Training Data Leakage Risks
- Model Inversion and Extraction Attacks
- Sensitive Data Exposure via Outputs
- LLM API Security Risks
- Authentication and Authorization for LLM Services
- Secure Prompt Engineering Practices
- Output Validation and Content Filtering
- Guardrails, Sandboxing, and Isolation
- Monitoring and Detection of LLM Abuse
- Rate Limiting and Abuse Prevention
- LLM Supply Chain Security
- Third-Party Plugin and Tool Risks
- Secure Fine-Tuning Practices
- Privacy, PII, and Data Governance
- AI Governance and Policy Design
- Regulatory and Compliance Considerations
- Incident Response for LLM Security Events
- Red Teaming LLM Applications
- Blue Team Defensive Strategies
- Secure LLM Integration in Enterprise Apps
- Risk Assessment and Business Impact Analysis
- Future Threats and Emerging LLM Attack Vectors

Skills Validated by LLMSP

By earning the LLMSP certification, candidates demonstrate the ability to:

- Identify and assess LLM-specific security risks
- Detect and mitigate prompt injection attacks
- Design secure LLM architectures
- Implement effective guardrails and controls
- Evaluate AI governance frameworks
- Respond to LLM-related security incidents
- Advise organizations on secure AI adoption

Why Employers Value LLMSP

As AI adoption accelerates, organizations struggle to find professionals who understand both security and LLM behavior. LLMSP signals that the holder can operate at the intersection of AI engineering, security, and risk management.

It provides confidence that the certified professional can:

- Prevent costly AI-driven data breaches
- Reduce legal and compliance exposure
- Secure customer-facing AI systems
- Protect organizational reputation


**“The LLMSP certification is the first exam I’ve seen that truly reflects the real-world security challenges of deploying Large Language Models in production. Every question forces you to think like both an attacker and a defender. There are no shortcuts, no memorization tricks, only practical decision-making under realistic constraints.**

**This exam sets a new benchmark for AI security certifications. Anyone responsible for securing LLM-powered applications should consider LLMSP essential.”**


© 2026 SecByte.org. All rights reserved.